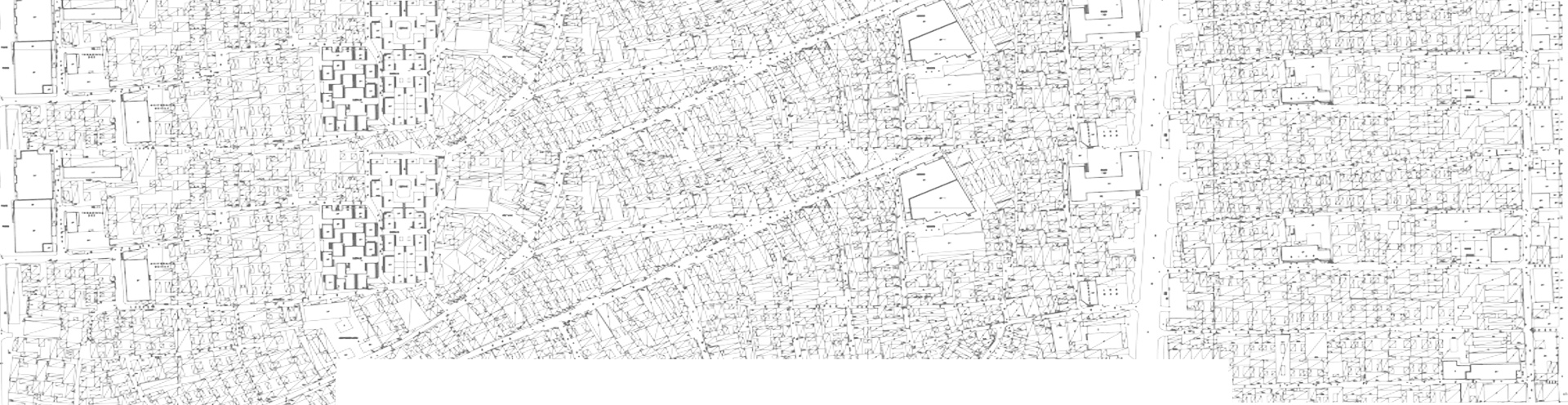

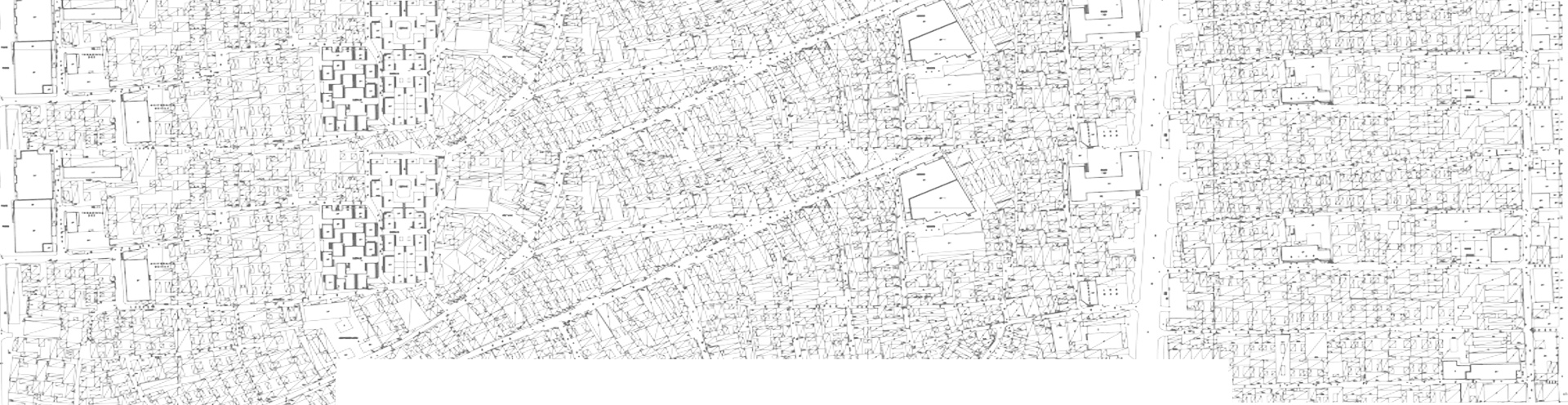

ABSTRACT摘要 Spatial networks have long been known for their internal spatial order or beauty (Hillier, 2007) and in the field spatial computation and analytics we employ a number of graph based methodologies in order to understand or extract intrinsic attributes of the urban fabric around us. In this research we take a complete different approach by looking at urban structure through the use of deep convolutional variational autoencoders with interesting results.空间网络因其内在的空间秩序或美感而闻名(希利尔, 2007),在空间计算和分析领域,我们采用了许多基于图形的方法,以理解或提取我们周围城市结构的内在属性。在本研究中,我们采用了一种完全不同的方法,通过使用深度卷积变分自动编码器来观察城市结构,得到了有趣的结果。 Autoencoders are an unsupervised learning technique in which we employ neural networks for the task of representation learning. Specifically, a neural network architecture imposes a bottleneck in the network which forces a compressed and generalization of the knowledge representation of the structure of space. This non-linear compression and subsequent reconstruction creates a unique set of features that are inherent of urban space. Our network is multiple layers deep in order to be able to encode basic spatial complexity and is build based on a convolutional network architecture which is inspired by biological processes similar to the connectivity pattern between neurons that resembles the organization of the animal visual cortex. Artificial neurons respond to real urban networks of London in a restricted region of the visual field, which partially overlap such that they cover the entire convolutional visual field. Convolutional layers apply a convolution operation to the input, passing the result to the next layer. Each convolutional neuron processes data only for its receptive field but the cascading nature of our network build a knowledge of more complex spatial relations as data move deeper. While convolutional neural networks are extensively used in supervised image classification the work presented in this papers is completely unsupervised.自动编码器是一种无人管理的学习技术,其中我们使用神经网络来完成表示学习的任务。具体而言,神经网络体系结构在网络中施加了瓶颈,迫使对空间结构的知识表示进行压缩和泛化。这种非线性压缩和随后的重建创造了一套独特的城市空间固有的特征。我们的网络是多层的,以便能够编码基本的空间复杂性,它是基于卷积网络架构构建的,该架构的灵感来自生物过程,类似于神经元之间的连通性模式,类似于动物视觉皮层的组织。人工神经元对伦敦的真实城市网络在视野的限定区域内做出反应,这些区域部分重叠,从而覆盖整个卷积的视野。卷积层对输入进行卷积运算,并将结果传递给下一层。每个卷积神经元只处理它的接收域的数据,但随着数据的深入,我们网络的层叠性质构建了更复杂空间关系的知识。卷积神经网络在有监督图像分类中得到了广泛的应用。而本文所做的工作是完全无人运行的。 In this paper we present the complete architecture of the deep convolutional network that was trained for months using data from the city of London, concluding with two significant outcomes extracted from the variational encoding and decoding processes. A non-linear clustering, or neural aggregation, of urban space based on the learned features and a generative urban network output based on the variational synthesis we call machine intuition.在本文中,我们展示了深度卷积网络的完整架构,该网络使用来自伦敦市的数据进行了数月的计算,最后从变分编码和解码过程中提取了两个重要的结果。基于所学特征的城市空间的非线性聚类或神经聚合,以及基于变分综合的生成式城市网络输出,我们称之为机器直觉。

2020-12-24REFERENCES参考文献 Bourlard, H. and Kamp, Y. (1988). Auto-association by multilayer perceptrons and singular value decomposition. Biological Cybernetics, 59, 291–294. Bojarski, M., Del Testa, D., Dworakowski, D., Firner, B., Flepp, B., Goyal, P., Zhang, X. (2016). End to end learning for self-driving cars. arXiv preprint arXiv:1604.07316. Doersch, C. (2016). Tutorial on variational autoencoders. arXiv preprint arXiv:1606.05908. DiCarlo, J. J. (2013). Mechanisms underlying visual object recognition: Humans vs. neurons vs. machines. NIPS Tutorial. Everitt, BS (1984). An Introduction to Latent Variables Models. Chapman & Hall. ISBN 978- 9401089548. Fukushima, K. (1980). Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biological Cybernetics, 36, 193–202. Gibson, J. (1979), The Ecological Approach to Visual Perception, Boston: Houghton Mifflin. Hinton, G. E. and Zemel, R. S. (1994). Autoencoders, minimum description length, and Helmholtz free energy. In NIPS’1993 . Hinton, G. E. and Salakhutdinov, R. (2006). Reducing the dimensionality of data with neural networks. Science, 313(5786), 504–507. Hillier, Bill. Space is the machine: a configurational theory of architecture. Space Syntax, 2007. Hubel, D. H. and Wiesel, T. N. (1959). Receptive fields of single neurons in the cat’s striate cortex. Journal of Physiology, 148, 574–591. Kingma, D. P. (2013). Fast gradient-based inference with continuous latent variable models in auxiliary form. Technical report, arxiv:1306.0733. Kingma, D. P. and Welling, M. (2014). Auto-encoding variational bayes. In Proceedings of the International Conference on Learning Representations (ICLR). LeCun, Y., Bottou, L., Bengio, Y., and Haffner, P. (1998b). Gradient based learning applied to document recognition. Proc. IEEE. LeCun, Y. (1989). Generalization and network design strategies. Technical Report CRG-TR-89-4, University of Toronto. Rezende, D. J., Mohamed, S., and Wierstra, D. (2014). Stochastic backpropagation and approximate inference in deep generative models. In ICML’2014. Preprint: arXiv:1401.4082. Sparkes, B. (1996). The Red and the Black: Studies in Greek Pottery. Routledge Tandy, D. W. (1997). Works and Days: A Translation and Commentary for the Social Sciences. University of California Press. Varoudis, T. (2012). depthmapX - Multi-Platform Spatial Network Analyses Software. OpenSource. Yu D., Yao K., Su H., Li G. and F. Seide, KL-divergence regularized deep neural network adaptation for improved large vocabulary speech recognition, 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, 2013, pp. 7893-7897. Zeiler, Matthew D., Dilip Krishnan, Graham W. Taylor, and Rob Fergus. "Deconvolutional networks." (2010): 2528-2535.

2020-12-24